Is DeepSeek an Nvidia killer?

Reports of my death are greatly exaggerated - Nvidia

It’s been a crazy couple of days in the world of AI and markets.

I haven’t checked my Robinhood account, and I don’t want to.

But after a couple of days of thought, I think it’s all an overreaction.

I had to write about this big topic for my weekly newsletter (it’s in Spanish; you should really subscribe). After researching it, I feel ready to share my early thoughts with you.

So, let’s get to it. I’ll try to answer three questions:

What is DeepSeek, and did this company really make a giant leap in AI?

Are OpenAI, Meta, and others in trouble?

What other implications could this have in the new global race for AGI?

Let’s dive in.

What is DeepSeek?

DeepSeek is a Chinese artificial intelligence company that develops chatbot-type models.

Let me repeat this to clarify my point: DeepSeek is a Chinese AI company whose goals align with China’s strategic objectives.

Without delving into the details of the CEO and the team’s history, let’s explore what sets DeepSeek’s R1 model apart from OpenAI’s models, Claude, and Llama.

Current large language models (LLMs) work by predicting what comes next in a sequence of text. Is DeepSeek’s R1 just the same as your regular LLM, then? Yes and no. (For simplicity, I’ll use OpenAI as the primary example from now on.)

Feel free to ask ChatGPT how an LLM works, or watch this video for a quick overview.
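To make "predicting what comes next" concrete, here is a deliberately tiny sketch in Python. It is a toy built on assumptions and does not reflect how ChatGPT or DeepSeek work internally. Real LLMs use neural networks over subword tokens. This sketch instead counts which word follows which in a made-up sentence (a bigram model) and always picks the most frequent follower. The function names and the corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never followed."""
    if word not in follows:
        return None
    # Ties are broken by first occurrence (Counter.most_common behavior).
    return follows[word].most_common(1)[0][0]


def generate(follows, start, max_words=5):
    """Greedily extend a prompt one predicted word at a time."""
    out = [start]
    for _ in range(max_words):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


corpus = "the cat sat on the mat the cat ate the fish"  # hypothetical training text
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat
print(generate(model, "the"))
```

Each predicted word is fed back in as the context for the next prediction. LLMs run the same loop with a far richer model of context.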

How Does DeepSeek Achieve Cost Reduction?

How were they able to lower costs by 25x-30x?

This X thread is the best explainer of DeepSeek I’ve seen.

But long story short, they made two significant breakthroughs in LLM optimization.

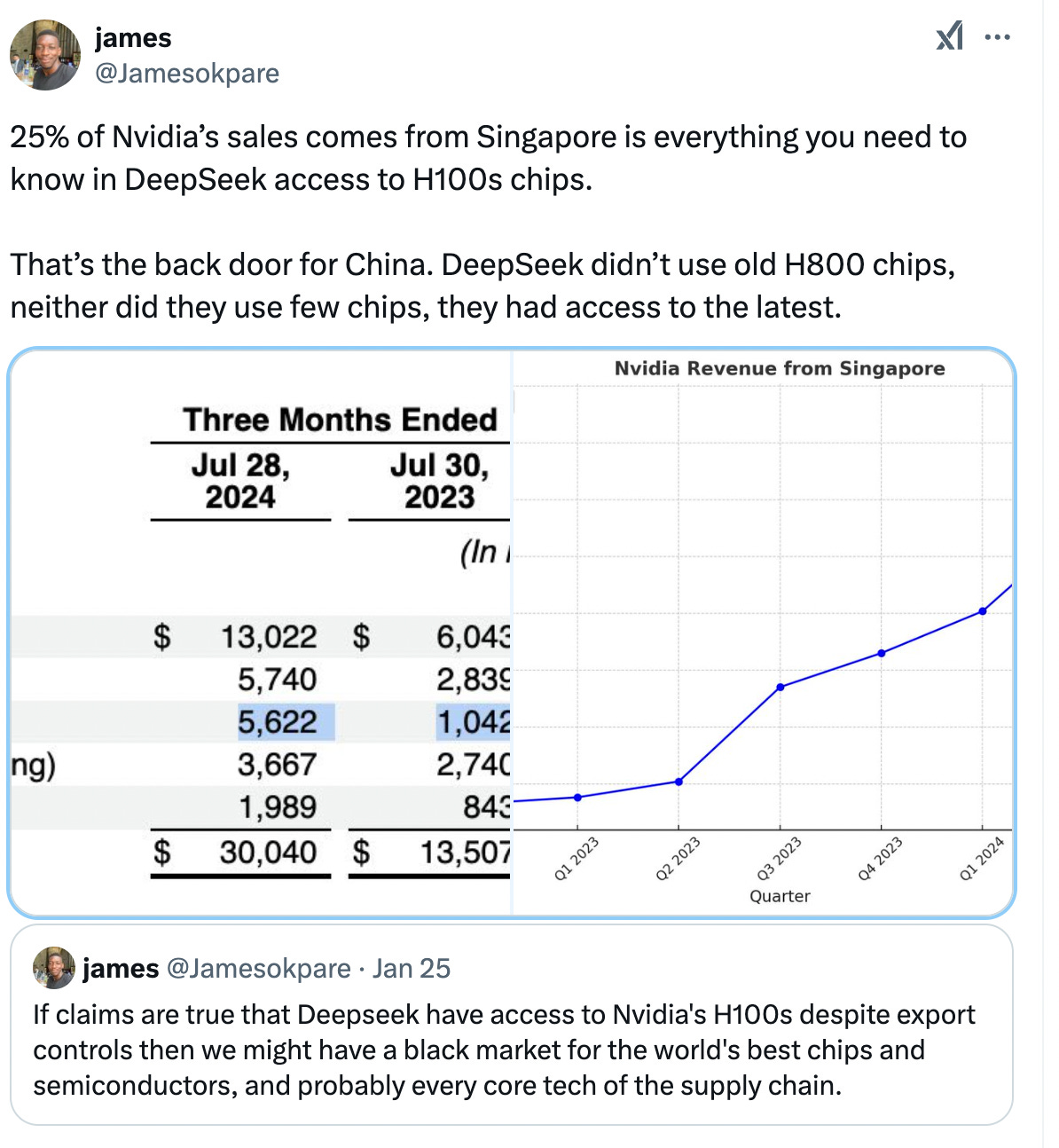

Both come from the restrictions DeepSeek faces as a Chinese company. The previous Trump and Biden administrations restricted sales of advanced chips to China.

Due to these constraints, DeepSeek had to use less capable versions of Nvidia’s AI chips. In particular, they report using H800s, a cut-down version of the H100 made to comply with US export rules.

That is, if we believe they haven’t had access to other chips. But that’s for another day.

So what did DeepSeek do? Instead of creating an LLM from scratch, they built their model by training it on the outputs of existing LLMs and by optimizing how it uses chips.

In that process, they were able to simplify some calculations and weights. They also tested it against o1, optimizing specifically for math and coding problems.[1]
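One common way to train a model "on existing LLMs" is knowledge distillation. A smaller student model is trained to match a larger teacher model's probability distribution over the next token. The sketch below shows only the textbook loss, using hypothetical logits for a three-word vocabulary. It is not DeepSeek's actual pipeline. Through a public API you typically see only the generated text, so in that setting distillation usually means fine-tuning on the teacher's answers instead.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    Scaled by temperature**2, a common convention that keeps gradient
    magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Hypothetical next-token scores over a three-word vocabulary.
teacher = [4.0, 1.0, 0.5]
student_close = [3.8, 1.1, 0.4]
student_far = [0.5, 1.0, 4.0]

print(distillation_loss(teacher, student_close))
print(distillation_loss(teacher, student_far))
```

Training the student means nudging its weights to push this loss down, so its predictions drift toward the teacher's.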

Are Nvidia, OpenAI, Meta, and others cooked?

The short answer is no.

It’s still early in the AI game, and calling a winner less than a week after DeepSeek became widely known is premature.

In this market, losing means going bankrupt. None of these players seems even close to that.

The other thing is that DeepSeek needs other models to innovate and exist, at least for now.

DeepSeek’s choice to go open source lets competitors catch up on efficiency. This could lead to faster wins for incumbents like OpenAI.

So, what company benefits from DeepSeek’s existence?

Apple.

Why?

With this level of efficiency, running LLMs locally is now feasible.

This means Siri (sorry, Apple Intelligence) should be able to run on an iPhone without hiccups.
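A rough way to sanity-check "running LLMs locally" is to estimate how much memory a model's weights need: parameters × bits per weight ÷ 8 bytes. This ignores activations, the KV cache, and runtime overhead. The model sizes and bit widths below are illustrative assumptions, not Apple's or DeepSeek's actual configurations.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = num_params * bits_per_weight // 8
    return total_bytes / 1e9


# Hypothetical model sizes and precisions, for illustration only.
for params in (1_500_000_000, 7_000_000_000, 70_000_000_000):
    for bits in (16, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{params / 1e9:.1f}B params at {bits}-bit: ~{gb:.1f} GB")
```

Cutting precision from 16 to 4 bits shrinks the weights by 4x. Smaller models shrink them further, which is what makes on-device use plausible.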

If that holds, the financial alpha could sit in the app layer. And you know who has the advantage there, with its 30% App Store fee → Apple.

Other startups could end up being the biggest winners. More competition at the model layer means cheaper AI and a shift in focus toward product design and capturing customers at the local level.

Jevons Paradox

Finally, even in Nvidia’s worst-case scenario, where AI training becomes much cheaper, the company could still win.

Jevons paradox, which Satya Nadella (Microsoft’s CEO) pointed to, says that when something becomes commoditized and cheaper (like fuel), overall use of it goes up.

Applied here: as LLMs use chips more efficiently, total chip usage can actually rise. More chips → better results for Nvidia.
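A back-of-the-envelope way to see when Jevons paradox kicks in is a constant-elasticity demand curve, where quantity demanded is Q = A * p^(-e). Total spending is p * Q = A * p^(1 - e). A price cut therefore raises total spending only if demand is elastic (e > 1). The sketch below assumes "price" means the cost per unit of AI compute and uses made-up elasticities. It illustrates the mechanism and is not a forecast for Nvidia.

```python
def total_spend(price, demand_scale, elasticity):
    """Total spend p * Q under constant-elasticity demand Q = A * p**(-e)."""
    quantity = demand_scale * price ** (-elasticity)
    return price * quantity


old_price, new_price = 1.0, 0.1  # hypothetical: compute gets 10x cheaper
for e in (0.5, 1.5):  # hypothetical elasticities: inelastic vs. elastic demand
    before = total_spend(old_price, 1.0, e)
    after = total_spend(new_price, 1.0, e)
    print(f"elasticity={e}: spend {before:.2f} -> {after:.2f}")
```

With these made-up numbers, spending falls from 1.00 to 0.32 when e = 0.5 but rises from 1.00 to 3.16 when e = 1.5. The bet on Nvidia is a bet that demand for AI compute behaves like the second case.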

Geopolitical consequences

As Marc Andreessen suggested in his X post yesterday, is this the Sputnik moment of the China-US AI war?

It’s the first real threat to the AI advantage the US ecosystem thought it had.

The release of DeepSeek coincided with the TikTok ban taking effect (for a while) and with the announcement of Project Stargate.

Nvidia could also face more scrutiny over how it enforces the export restrictions, given its sales through Singapore.

On a related note, the DeepSeek news produces two losers.

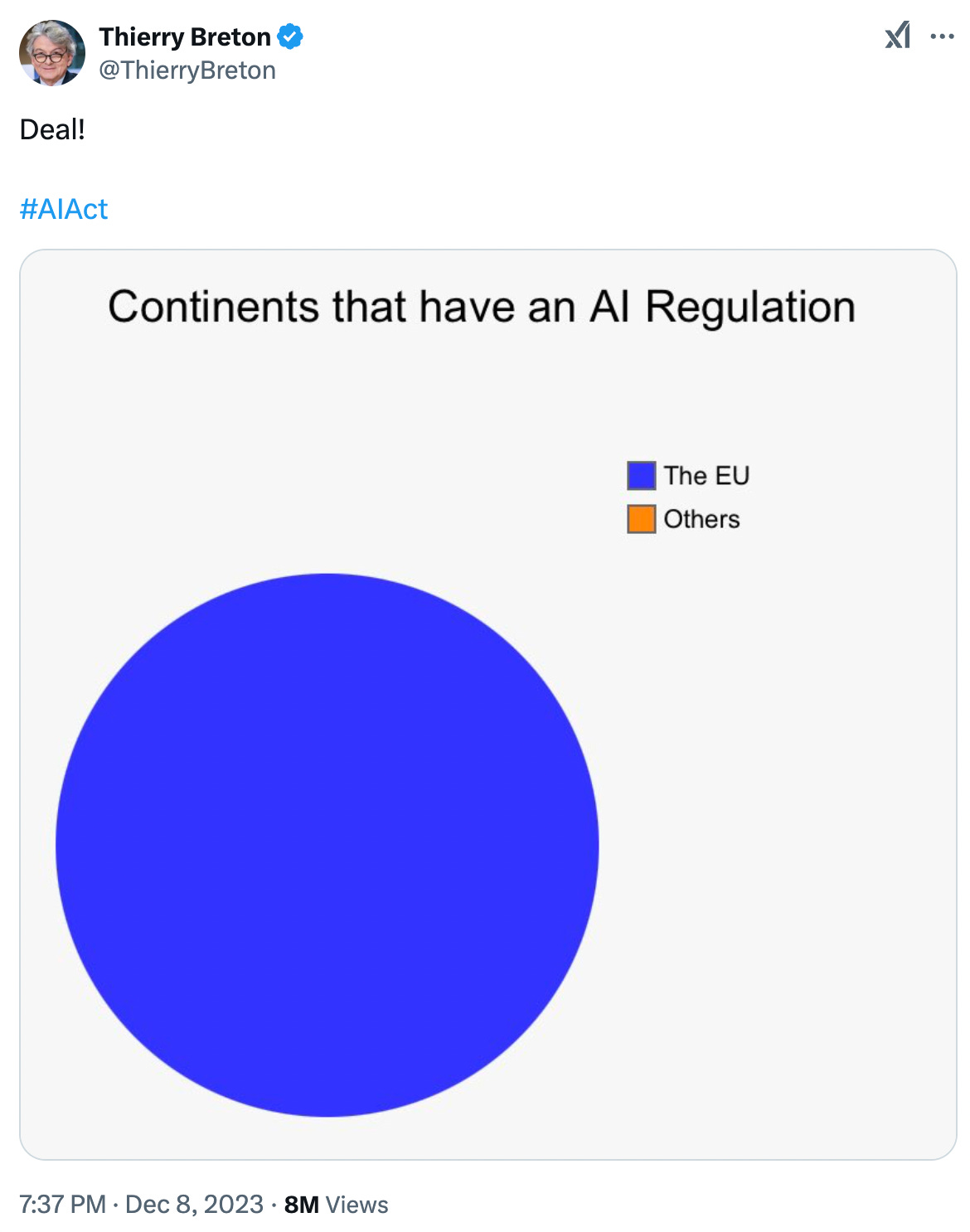

The EU, which has regulated itself out of AI markets.

AI alarmists.

I’m sure this will bring AGI sooner and take pressure off the debate driven by AI alarmists, since the geopolitical stakes will be seen as the bigger threat. Regulation will be pushed back.

Governments in general, and this administration in particular, will not be burdened by pressure from AI alarmists in the middle of an AI race that resembles a Cold War existential threat.

DeepSeek Unleashed: Stirring the AI Pot

It’s still too early to distill what will happen with AI, especially after we hear about more releases from Chinese companies. But, as a framework, we can start considering the positive impact this could have on companies like Nvidia and analyze how other companies adjust.

OpenAI is an innovative company, and it could pivot quickly toward agent-style products like Operator.

For further analysis of the dynamics between China and the US, I recommend a few episodes of the Technology Brothers podcast on DJI (drones) and DeepSeek (two episodes: 1, 2).


[1] The training cost in their white paper does not account for buying and running the chips, or for the original training of the OpenAI models they built on. So no, it’s not 25x cheaper if your shortcut is using other companies’ models for next to nothing. The $5M figure is not very credible.
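For context, the widely quoted figure traces back to DeepSeek’s V3 technical report. That report multiplies the GPU-hours of the final training run by an assumed rental price of $2 per H800 GPU-hour. The sketch below reproduces that arithmetic from the report’s published GPU-hour breakdown. The report itself notes that prior research and ablation runs are excluded, and nothing here covers buying the hardware.

```python
# GPU-hour breakdown as published in the DeepSeek-V3 technical report.
GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
# The report's rental-price assumption, not a hardware purchase cost.
ASSUMED_RENTAL_USD_PER_GPU_HOUR = 2.0


def headline_training_cost(gpu_hours, usd_per_hour):
    """Rental-equivalent cost of the final training run only."""
    return sum(gpu_hours.values()) * usd_per_hour


cost = headline_training_cost(GPU_HOURS, ASSUMED_RENTAL_USD_PER_GPU_HOUR)
print(f"${cost / 1e6:.3f}M")  # $5.576M
```

The formula simply has no term for chip purchases, earlier experiments, or the work embedded in other models. That is why the headline number understates what it took to build the model.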